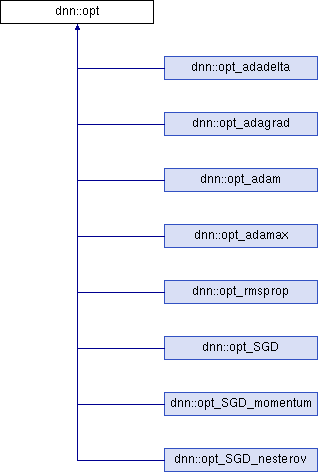

Optimizer base class.

#include <dnn_opt.h>

Public Member Functions

| Type | Member | Description |
| --- | --- | --- |
| | `opt ()` | |
| | `~opt ()` | |
| `virtual void` | `apply (arma::Cube< DNN_Dtype > &W, arma::Mat< DNN_Dtype > &B, const arma::Cube< DNN_Dtype > &Wgrad, const arma::Mat< DNN_Dtype > &Bgrad)=0` | Apply the optimizer to the layer parameters. |
| `virtual std::string` | `get_algorithm (void)` | Get the optimizer algorithm information. |
| `void` | `set_learn_rate_alg (LR_ALG alg, DNN_Dtype a=0.0, DNN_Dtype b=10.0)` | Set learning rate algorithm. |
| `void` | `update_learn_rate (void)` | Update learning rate. |
| `DNN_Dtype` | `get_learn_rate (void)` | Get the learning rate. |

Protected Attributes

| Type | Member | Description |
| --- | --- | --- |
| `std::string` | `alg` | |
| `DNN_Dtype` | `lr` | Learning rate. |
| `DNN_Dtype` | `reg_lambda` | Regularisation parameter lambda. |
| `DNN_Dtype` | `reg_alpha` | Elastic net mix parameter: 0 = ridge (L2) .. 1 = LASSO (L1). |
| `LR_ALG` | `lr_alg` | Learning rate schedule algorithm. |
| `DNN_Dtype` | `lr_0` | Initial value of lr. |
| `DNN_Dtype` | `lr_a` | Internal parameter a. |
| `DNN_Dtype` | `lr_b` | Internal parameter b. |
| `arma::uword` | `it` | Iteration counter. |

Detailed Description

Optimizer base class.

Implements the optimizer that finds the minimum of the cost function with respect to the layers' trainable parameters.

Constructor & Destructor Documentation

◆ opt()

◆ ~opt()

Member Function Documentation

◆ apply()

pure virtual

Apply the optimizer to the layer parameters.

- Parameters
  - [in,out] `W`, `B`: Learnable parameters
  - [in] `Wgrad`, `Bgrad`: Gradients of the learnable parameters

Implemented in dnn::opt_rmsprop, dnn::opt_adagrad, dnn::opt_adadelta, dnn::opt_adamax, dnn::opt_adam, dnn::opt_SGD_nesterov, dnn::opt_SGD_momentum, and dnn::opt_SGD.
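To illustrate how a derived class might implement `apply()`, here is a minimal sketch of a plain gradient-descent step. It is not the library's code: `opt_sketch`, `sgd_sketch`, `Dtype` and `Params` are hypothetical names, and `std::vector` stands in for `arma::Cube` and `arma::Mat` so the example builds without Armadillo.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Stand-in for DNN_Dtype; the real typedef is not shown on this page.
using Dtype = double;
// Stand-in for arma::Cube / arma::Mat (assumption, keeps the sketch dependency-free).
using Params = std::vector<Dtype>;

// Hypothetical mirror of the base-class interface described above.
class opt_sketch {
public:
    virtual ~opt_sketch() = default;

    // Update W and B in place from their gradients.
    virtual void apply(Params& W, Params& B,
                       const Params& Wgrad, const Params& Bgrad) = 0;

    virtual std::string get_algorithm() const { return alg; }
    Dtype get_learn_rate() const { return lr; }

protected:
    std::string alg = "none";
    Dtype lr = 0.0;
};

// Plain gradient descent: p <- p - lr * grad for weights and biases.
class sgd_sketch : public opt_sketch {
public:
    explicit sgd_sketch(Dtype learn_rate) {
        alg = "SGD";
        lr = learn_rate;
    }

    void apply(Params& W, Params& B,
               const Params& Wgrad, const Params& Bgrad) override {
        step(W, Wgrad);
        step(B, Bgrad);
    }

private:
    // Element-wise update over the common length of p and g.
    void step(Params& p, const Params& g) const {
        for (std::size_t i = 0; i < p.size() && i < g.size(); ++i) {
            p[i] -= lr * g[i];
        }
    }
};
```

A caller would construct `sgd_sketch opt(0.5)` and invoke `opt.apply(W, B, Wgrad, Bgrad)` once per training step; the parameters are modified in place, matching the `[in,out]` annotation above.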

◆ get_algorithm()

inline virtual

Get the optimizer algorithm information.

- Returns
  - Algorithm information string

Reimplemented in dnn::opt_rmsprop, dnn::opt_adagrad, dnn::opt_adadelta, dnn::opt_adamax, dnn::opt_adam, dnn::opt_SGD_nesterov, dnn::opt_SGD_momentum, and dnn::opt_SGD.

◆ get_learn_rate()

inline

Get the learning rate.

◆ set_learn_rate_alg()

Set learning rate algorithm.

- Parameters
  - [in] `alg`: Algorithm
  - [in] `a`: Parameter a
  - [in] `b`: Parameter b

Sets the learning rate algorithm and its parameters:

- CONST: constant learning rate, lr = lr_0
- TIME_DECAY: time-based decay, lr = lr_0/(1+at)
- STEP_DECAY: stepped decay, lr = lr_0*(a)^(floor(b/t))
- EXP_DECAY: exponential decay, lr = lr_0*exp(-at)
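The schedule formulas can be sketched as a small free function. This is an illustration under assumptions, not the library's implementation: `LrAlg`, `scheduled_lr` and `Dtype` are hypothetical names, and `t` is assumed to be the iteration counter. STEP_DECAY is left out because its exponent as printed, floor(b/t), is undefined at t = 0 and the intended form is not clear from this page.

```cpp
#include <cmath>

// Stand-in for DNN_Dtype; the real typedef is not shown on this page.
using Dtype = double;

// Hypothetical enum; the real LR_ALG definition is not shown on this page.
enum class LrAlg { Constant, TimeDecay, ExpDecay };

// Learning rate at step t for the CONST, TIME_DECAY and EXP_DECAY formulas.
// lr_0 is the initial learning rate and a is the decay parameter.
Dtype scheduled_lr(LrAlg alg, Dtype lr_0, Dtype a, Dtype t) {
    switch (alg) {
        case LrAlg::TimeDecay:
            return lr_0 / (1.0 + a * t);      // lr = lr_0/(1+at)
        case LrAlg::ExpDecay:
            return lr_0 * std::exp(-a * t);   // lr = lr_0*exp(-at)
        case LrAlg::Constant:
            return lr_0;                      // lr = lr_0
    }
    return lr_0;  // fallback for out-of-range enum values
}
```

For example, with `lr_0 = 1.0` and `a = 1.0`, the time-based schedule halves the rate at `t = 1`, while the exponential schedule scales it by `exp(-1)`.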

◆ update_learn_rate()

inline

Update learning rate.

Member Data Documentation

◆ alg

◆ it

◆ lr

◆ lr_0

◆ lr_a

◆ lr_alg

protected

◆ lr_b

◆ reg_alpha

protected

◆ reg_lambda

protected
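As a rough illustration of how `reg_lambda` and `reg_alpha` could combine, the sketch below adds the gradient of the usual elastic-net penalty, lambda * (alpha * |w| + (1 - alpha)/2 * w^2), to an existing gradient. Whether dnn::opt uses exactly this form is an assumption; `add_elastic_net_grad`, `sign_of` and `Dtype` are hypothetical names, and `std::vector` stands in for the Armadillo containers.

```cpp
#include <cstddef>
#include <vector>

// Stand-in for DNN_Dtype; the real typedef is not shown on this page.
using Dtype = double;

// Sign of w: -1, 0 or +1 (0 is the subgradient chosen at w == 0).
inline Dtype sign_of(Dtype w) {
    return static_cast<Dtype>((w > 0) - (w < 0));
}

// Adds lambda * (alpha * sign(w) + (1 - alpha) * w) to each gradient entry.
// alpha = 0 gives a pure ridge (L2) term, alpha = 1 a pure LASSO (L1) term.
void add_elastic_net_grad(const std::vector<Dtype>& W,
                          std::vector<Dtype>& grad,
                          Dtype reg_lambda,
                          Dtype reg_alpha) {
    for (std::size_t i = 0; i < W.size() && i < grad.size(); ++i) {
        const Dtype l1 = reg_alpha * sign_of(W[i]);
        const Dtype l2 = (1.0 - reg_alpha) * W[i];
        grad[i] += reg_lambda * (l1 + l2);
    }
}
```

With `reg_alpha = 0` the added term is proportional to the weight itself, and with `reg_alpha = 1` it has constant magnitude `reg_lambda` for every non-zero weight, which matches the ridge and LASSO endpoints named in the attribute description.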

The documentation for this class was generated from the following file:

1.8.13
